GitHub Copilot Paid Models Comparison

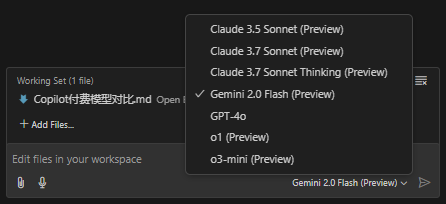

GitHub Copilot currently offers 7 models:

- Claude 3.5 Sonnet

- Claude 3.7 Sonnet

- Claude 3.7 Sonnet Thinking

- Gemini 2.0 Flash

- GPT-4o

- o1

- o3-mini

The official documentation lacks an introduction to these seven models. This post briefly describes their ratings across various domains to highlight their specific strengths, helping readers switch to the most suitable model when tackling particular problems.

Model Comparison

The table below is a multi-dimensional comparison based on publicly available evaluation data (some figures are estimates or adjusted from multiple sources). It covers three key metrics: coding (SWE-Bench Verified), math (AIME'24), and reasoning (GPQA Diamond).

| Model | Coding Performance (SWE-Bench Verified) | Math Performance (AIME'24) | Reasoning Performance (GPQA Diamond) |
|---|---|---|---|
| Claude 3.5 Sonnet | 70.3% | 49.0% | 77.0% |
| Claude 3.7 Sonnet (Standard) | ≈83.7% (↑ ≈19%) | ≈58.3% (↑ ≈19%) | ≈91.6% (↑ ≈19%) |
| Claude 3.7 Sonnet Thinking | ≈83.7% (≈ same as standard) | ≈64.0% (improved further) | ≈95.0% (stronger reasoning) |
| Gemini 2.0 Flash | ≈65.0% (estimated) | ≈45.0% (estimated) | ≈75.0% (estimated) |
| GPT-4o | 38.0% | 36.7% | 71.4% |
| o1 | 48.9% | 83.3% | 78.0% |
| o3-mini | 49.3% | 87.3% | 79.7% |

Notes:

- Values above come partly from public benchmarks (e.g., Vellum’s comparison report at VELLUM.AI) and partly from cross-platform estimates (e.g., Claude 3.7 is roughly 19% better than 3.5); Gemini 2.0 Flash figures are approximated.

- “Claude 3.7 Sonnet Thinking” refers to inference when “thinking mode” (extended internal reasoning steps) is on, yielding notable gains in mathematics and reasoning tasks.
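The "roughly 19% better" estimate mentioned in the notes can be reproduced with a few lines of arithmetic. The sketch below assumes the uplift is relative and applied uniformly to every metric; that is an interpretation of the note, not something the cited sources confirm. The names `UPLIFT` and `estimate_with_uplift` are illustrative only.

```python
# Claude 3.5 Sonnet scores copied from the table above (percent).
claude_35 = {"SWE-Bench Verified": 70.3, "AIME'24": 49.0, "GPQA Diamond": 77.0}

# Assumption: "roughly 19% better" means a uniform relative uplift on each metric.
UPLIFT = 0.19


def estimate_with_uplift(scores, uplift):
    """Scale every score by (1 + uplift) and round to one decimal place."""
    return {metric: round(value * (1 + uplift), 1) for metric, value in scores.items()}


claude_37_estimate = estimate_with_uplift(claude_35, UPLIFT)

for metric, value in claude_37_estimate.items():
    print(f"{metric}: ≈{value}%")
```

Under that assumption the results are 83.7%, 58.3%, and 91.6%, matching the Claude 3.7 Sonnet (Standard) row. This suggests those figures are extrapolations rather than independently measured scores, so they are best read as rough guides.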

Strengths, Weaknesses, and Application Areas

Claude family (3.5/3.7 Sonnet and its Thinking variant)

Strengths:

- High accuracy in coding and multi-step reasoning—3.7 significantly improves over 3.5.

- Math and reasoning results are further boosted under “Thinking” mode; well-suited for complex logic or tasks needing detailed planning.

- Advantage in tool-use and long-context handling.

Weaknesses:

- Standard mode math scores are lower; only extended reasoning produces major gains.

- Higher cost and latency in certain scenarios.

Applicable domains: Software engineering, code generation & debugging, complex problem solving, multi-step decision-making, and enterprise-level automation workflows.

Gemini 2.0 Flash

Strengths:

- Large context window for long documents and multimodal input (e.g., image parsing).

- Competitive reasoning & coding results in some tests, with fast response times.

Weaknesses:

- May “stall” in complex coding scenarios; stability needs more validation.

- Several metrics are preliminary estimates; overall performance awaits further public data.

Applicable domains: Multimodal tasks, real-time interactions, and applications requiring large contexts—e.g., long-document summarization, video analytics, and information retrieval.

GPT-4o

Strengths:

- Natural and fluent language understanding/generation—ideal for open-ended dialogue and general text processing.

Weaknesses:

- Weaker on specialized tasks like coding and math; its 38.0% on SWE-Bench Verified and 36.7% on AIME'24 are the lowest scores in the table.

- Cost is high relative to its coding and math scores, so it offers lower value than some competitors.

Applicable domains: General chat systems, content creation, copywriting, and everyday Q&A tasks.

o1 and o3-mini (OpenAI family)

Strengths:

- Excellent mathematical reasoning: o1 and o3-mini score 83.3% and 87.3% on AIME'24, respectively.

- Stable reasoning ability, suited for scenarios demanding high-precision math and logical analysis.

Weaknesses:

- Mid-tier coding performance, well behind the Claude family (48.9% and 49.3% versus 70.3% to 83.7% on SWE-Bench Verified).

- Overall capabilities are somewhat unbalanced across tasks.

Applicable domains: Scientific computation, math problem solving, logical reasoning, educational tutoring, and professional data analysis.
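As a quick cross-check of the recommendations above, the table's figures can be used to look up the highest-scoring model for a given kind of task. This is a minimal sketch: the scores are copied from the comparison table (including its estimated values), and the `best_model` helper and the task labels are hypothetical names introduced for illustration, not part of any Copilot API.

```python
# Scores copied from the comparison table: (coding, math, reasoning), in percent.
# Several entries are estimates, as noted in the post.
SCORES = {
    "Claude 3.5 Sonnet": (70.3, 49.0, 77.0),
    "Claude 3.7 Sonnet": (83.7, 58.3, 91.6),
    "Claude 3.7 Sonnet Thinking": (83.7, 64.0, 95.0),
    "Gemini 2.0 Flash": (65.0, 45.0, 75.0),
    "GPT-4o": (38.0, 36.7, 71.4),
    "o1": (48.9, 83.3, 78.0),
    "o3-mini": (49.3, 87.3, 79.7),
}

# Column order of the tuples above.
TASKS = ("coding", "math", "reasoning")


def best_model(task):
    """Return the model with the highest table score for the given task.

    On a tie, the model listed first in SCORES wins.
    """
    column = TASKS.index(task)
    return max(SCORES, key=lambda model: SCORES[model][column])


for task in TASKS:
    print(f"{task}: {best_model(task)}")
```

A lookup like this only ranks by benchmark score. It ignores cost, latency, and context-window size, which the strengths and weaknesses above treat as equally relevant when choosing a model.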